Everyone is looking for a definition of DevOps, but I won’t attempt to give one.

So many others have defined it before me and, you guessed it, the definitions vary. Everyone in the IT sector is discussing DevOps. There is a high demand for DevOps engineers, and businesses are scrambling to fill their ranks. Some say DevOps is about automation, while others say it is about culture. The two claims seem to contradict each other.

So, if it’s automation, system administrators have been doing it since the dawn of time, using scripting languages and other tools in the process. Still, we referred to it as “automation” rather than “DevOps.” You may then claim that it has to do with culture, but if so, why are there so many automation tools? You could think I’m confusing you right now, but believe me — I’ll prove a few things later. Just keep reading.

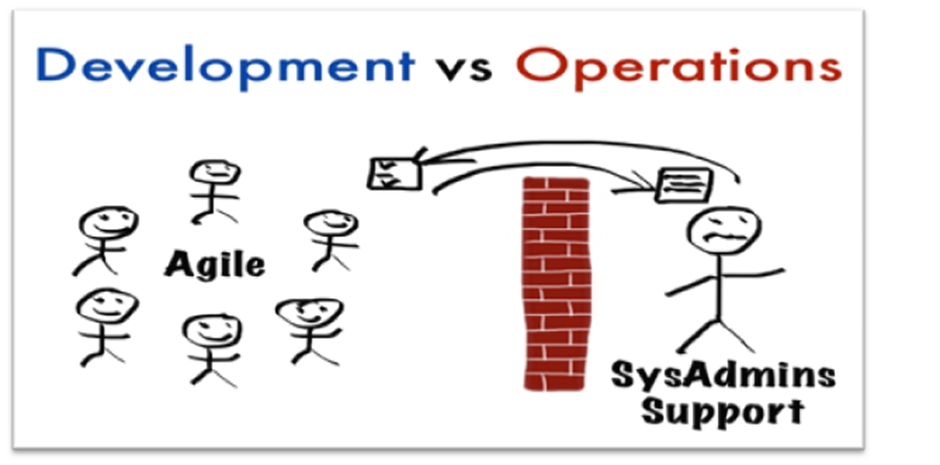

If you are reading this, you must be involved with the software business. The software industry’s only objective is to produce and provide software to users. The software industry has been split into two segments from the beginning, and those two segments are development and operations. Software creation and testing are the main goals of development. Delivering such software to users through a website or as installable software is the main emphasis of operations. Once the program is up and running, we update it, add new features for our users, and ensure it keeps working right.

Job Roles and Their Mission

We have many jobs in development, such as developers, software testers (QA), database developers, and architects. The goal is to swiftly build all the best and newest features for the program.

Roles in operations include system administrators, cloud engineers, database administrators, and security specialists. Here, the goal is to keep systems continuously available. By systems, we mean the machines where the software runs, such as the servers hosting your websites and databases.

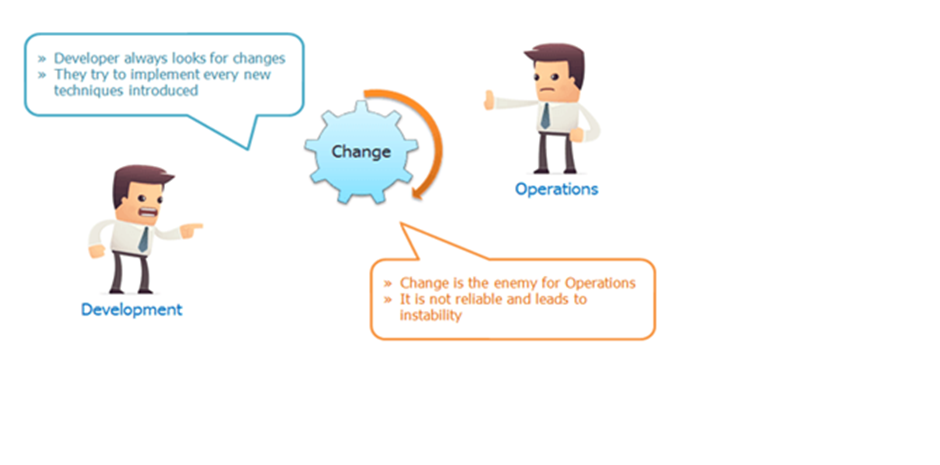

You would have understood by now that both parties have different aims and goals. One focuses on quick change, and the other concentrates on stability. These are poles apart; if we make rapid changes (continuously adding new features), stability becomes an issue. A system that changes constantly will have problems with stability. It’s also true the other way around.

We live in an environment where software and applications are constantly changing. Think about this: if a new program comes out with the best features while you are still using an old one, you will switch to the new one. This means a company risks losing money if its Dev and Ops teams don’t give you the newest features in a stable form.

So far, I have established a few points that I will list below:

· Developers aim to create the latest features quickly.

· Operations aims to keep systems stable.

· Users demand quick changes.

· Users also need stable software or apps.

The main goal of DevOps is to give users the newest and best features with stability.

It’s not just about creating new features; it’s also about delivering those features to the user; otherwise, what’s the point of creating if we cannot deliver it on time? So how does DevOps solve that problem? To understand it, first, we need to understand the development procedure, and then we will focus on the operations.

Software Development Processes

The development process is explained in detail in later chapters. Here, we will keep it to a minimum. The development team uses some software development models to create the software. In simple terms, these software development models are rules everybody follows to get things done.

Waterfall Model

There is the waterfall model, which is a traditional model and does not fit well in today’s fast-moving world. In the waterfall model, the following phases are followed in order:

· System and software requirements: captured in a product requirements document.

· Analysis: resulting in models, schema, and business rules.

· Design: resulting in the software architecture.

· Coding: the development, proving, and integration of software.

· Testing: the systematic discovery and debugging of defects.

· Operations: the installation, migration, support, and maintenance of complete systems.

So, the waterfall model says that you shouldn’t move on to the next phase until the phase before it has been reviewed and checked. This model is good for the operations team, as they get the whole software developed at once, which they can deploy and maintain. New changes are also less frequent, which puts less burden on the Operations team. But this model does not keep pace with the current fast-moving world. It has many downsides, and most development now happens with the Agile model.

Agile Model

An agile model develops software in small iterations instead of delivering the full product at once. The product’s entire feature list is divided into several smaller lists. For example, if the software has 50 features, we can create 5 lists of 10 features each. Developers then work on 10 features at a time: they develop and deliver the first 10 in the first iteration and continue with the rest until the final product is complete.
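
The feature split in this example can be sketched in a few lines of Python. This is only an illustration: the function name `plan_iterations` and the placeholder feature names are hypothetical, and real teams usually group features by priority and dependencies rather than by position in a list.

```python
def plan_iterations(features, per_iteration):
    """Split a product's feature list into consecutive iteration backlogs."""
    if per_iteration <= 0:
        raise ValueError("per_iteration must be positive")
    # Slice the full list into chunks of `per_iteration` features each.
    return [
        features[start:start + per_iteration]
        for start in range(0, len(features), per_iteration)
    ]


# Hypothetical product with 50 placeholder features.
features = [f"feature-{n}" for n in range(1, 51)]
iterations = plan_iterations(features, per_iteration=10)

for number, backlog in enumerate(iterations, start=1):
    print(f"Iteration {number}: {backlog[0]} .. {backlog[-1]} ({len(backlog)} features)")
```

Each backlog is then developed and delivered as one iteration, which is why operations sees five deliveries instead of one.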

Every iteration involves cross-functional teams working simultaneously on various areas such as:

· Planning.

· Requirements Analysis.

· Design.

· Coding.

· Unit Testing and

· Acceptance Testing.

Now we are talking about building the software and delivering it to different environments such as Dev, QA, Staging, UAT, and Prod. This puts a lot of pressure on the Operations team, since they have to keep rolling out these changes across multiple environments. By the way, these environments are just groups of servers owned by different teams. QA, for example, is owned by the software testers who test the software.

The general approach is that once developers finish a new feature, they send a procedural document to the operations team explaining how to deploy it. Developers test the feature on their own machines and expect it to work in production as well. But production systems are built differently, with multiple servers for web, database, and backend services, protected by a firewall and a Network Access Control List (NACL). Production runs a stable OS and software whose versions differ from those on the developers’ systems. A development server is usually a single computer, and most of the time all the services run on that one server.

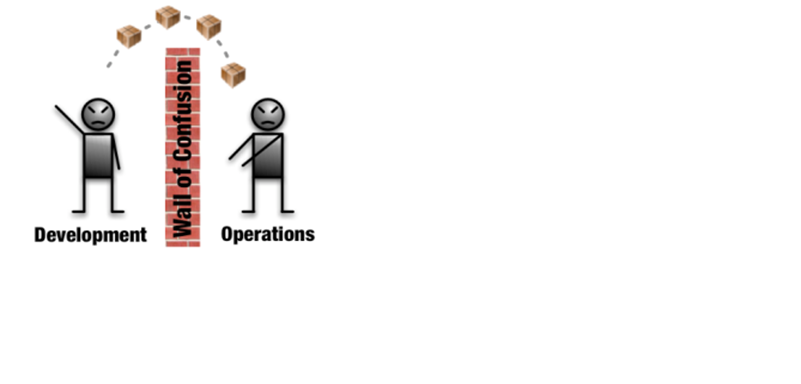

The Problem

Relying on the procedural document and on their own skills and knowledge, operations deploys the change to production. This is where the problem comes in: the deployment may fail and take the entire service down. This happens because of a lack of communication between the Dev and Ops teams. Dev does not understand the Ops part, and the reverse is also true. So Ops feels that such frequent changes may break the system, and Dev feels there are too many restrictions on delivering the latest changes. We also need to think about security here, because security testing is done before a change goes into production. This entire delivery procedure is slow and, most of the time, manual.

Think about quick changes. Now, the agile model is not helping the operations team deliver the code faster. So, no matter how agile development is, operations are still waterfall. If you think about it for a while, you will understand that it’s not a technological problem but a cultural one. Both segments of the software industry have distinct cultures. If this culture is not changed, we cannot deliver better features quickly to the users.

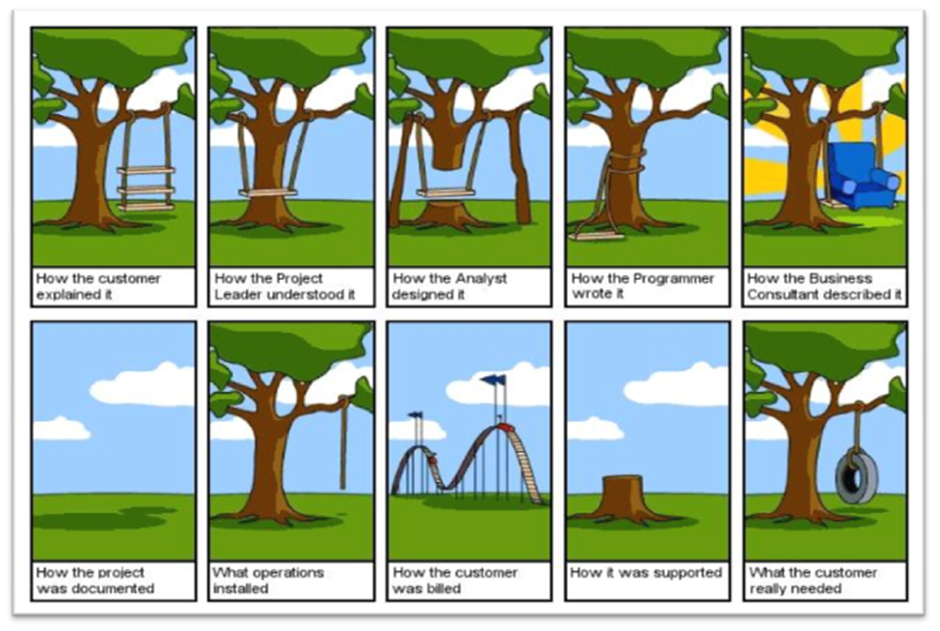

A very famous joke describes the communication problem:

Implementing DevOps

DevOps solves this problem by changing the culture and making it one culture: Dev+Ops. There would be one team, DevOps, with one goal: rapid delivery with stability.

But How?

The first step is to establish communication and collaboration between Dev and Ops. Dev must understand the Ops part, and Ops must understand the development procedure. DevOps is the practice of having operations engineers and development engineers work together throughout the entire service lifecycle, from designing to developing to supporting production. In waterfall and agile lifecycles, we have seen that the development and operations teams are separate; they work separately in their own silos and have very different mottos.

DevOps Lifecycle

The DevOps lifecycle has the development and operations teams working together. As developers work through their agile iterations, Operations must set up systems and automate the deployment procedure. Automation is the key factor here, because the agile model produces new code again and again. This continuous release of code must be continuously deployed to many servers in the Dev, QA, Staging, and Production environments.

If the code deployment process is not automated, the ops team must do the deployment manually. Deployment may include the following procedures:

· Create servers if they don’t exist (On the cloud or virtual environment).

· Install and set up prerequisites or dependencies on the server.

· Build the software from raw source code (if the developers have not already done so).

· Deploy software to servers.

· Make config changes to the OS and software.

· Set up monitoring.

· Feedback & Report.

NOTE: The steps above may be fewer or more depending on the kind of deployment.
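
One way to picture an automated deployment is as an ordered list of steps, each of which checks the current state before changing anything. The sketch below is a toy model under stated assumptions: the `Server` class, the helper functions, and names such as `web01` and `nginx` are hypothetical stand-ins, and no real cloud or configuration management API is called.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """In-memory stand-in for a real server (hypothetical)."""
    name: str
    packages: set = field(default_factory=set)
    app_version: str = ""
    monitored: bool = False


def ensure_server(inventory, name):
    # Create the server only if it does not exist yet.
    if name not in inventory:
        inventory[name] = Server(name)
    return inventory[name]


def ensure_packages(server, required):
    # Install only the prerequisites that are missing.
    missing = set(required) - server.packages
    server.packages |= missing
    return sorted(missing)


def deploy(inventory, name, version, required):
    """Run the deployment steps in order and report what changed."""
    report = []
    if name not in inventory:
        report.append(f"created {name}")
    server = ensure_server(inventory, name)
    for package in ensure_packages(server, required):
        report.append(f"installed {package}")
    if server.app_version != version:
        server.app_version = version
        report.append(f"deployed {version}")
    if not server.monitored:
        server.monitored = True
        report.append("monitoring enabled")
    return report


inventory = {}
print(deploy(inventory, "web01", "1.0.0", ["nginx", "python3"]))
print(deploy(inventory, "web01", "1.0.0", ["nginx", "python3"]))  # nothing left to change
```

Running the same deployment twice changes nothing the second time. That property is what makes it safe to repeat an automated deployment for every new code change.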

When we get a new code change, it must be deployed to production or at least staging servers.

For this, all the processes must be automated. First, we must automate the build and release process, which includes:

● Developers push code to a centralized location.

● Fetch the developer’s code.

● Validate code.

● Build & test code.

● Package it into a distributable format (software or artifacts).

● Release it.
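
The build and release steps above can be sketched as a chain of stages that stops at the first failure. Everything here is illustrative: the stage functions are hypothetical placeholders working on a plain dictionary, where a real pipeline would call a version control system, a build tool, and an artifact repository.

```python
def fetch(ctx):
    # Placeholder for pulling the developers' code from the central location.
    ctx["source"] = ctx["pushed_code"]
    return True


def validate(ctx):
    # Toy validation rule: reject empty source.
    return bool(ctx["source"].strip())


def build_and_test(ctx):
    # Toy build (upper-casing the source) followed by a toy smoke test on the output.
    ctx["binary"] = ctx["source"].upper()
    return bool(ctx["binary"])


def package(ctx):
    # Bundle the build output into a named, versioned artifact.
    ctx["artifact"] = f"app-{ctx['version']}.pkg"
    return True


def release(ctx):
    ctx["released"] = True
    return True


STAGES = [fetch, validate, build_and_test, package, release]


def run_pipeline(pushed_code, version):
    """Run every stage in order; stop as soon as one reports failure."""
    ctx = {"pushed_code": pushed_code, "version": version, "released": False}
    for stage in STAGES:
        if not stage(ctx):
            ctx["failed_stage"] = stage.__name__
            break
    return ctx


print(run_pipeline("print('hello')", "1.0.0")["artifact"])
print(run_pipeline("   ", "1.0.1").get("failed_stage"))
```

Stopping at the first failed stage means a change that does not validate or build is never packaged or released.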

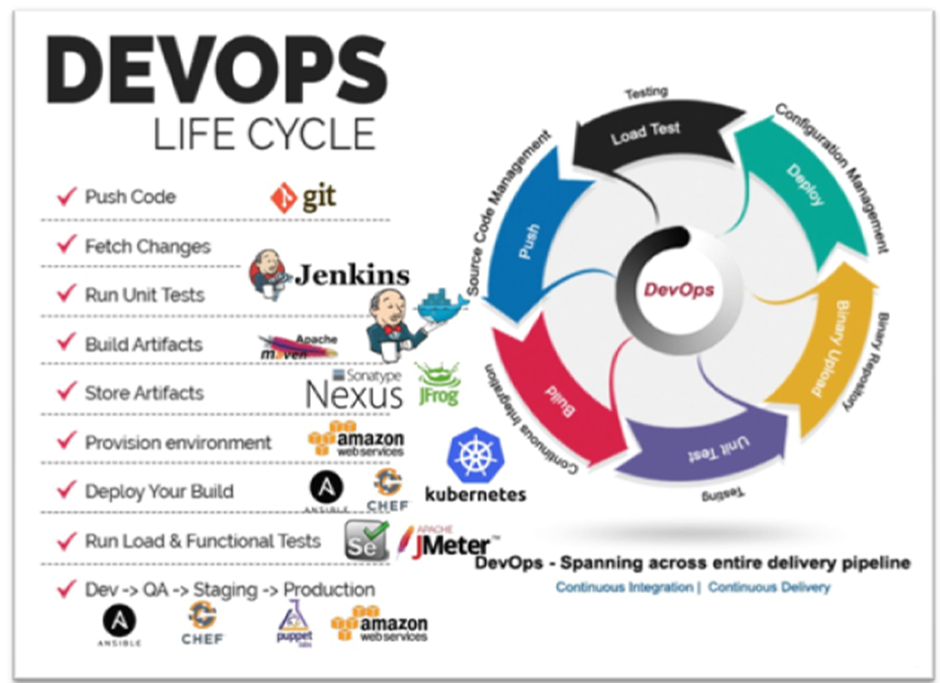

The next phase is to deploy this released software to servers, which we have already discussed. Combining this Build & Release process with the Deployment process gives us the DevOps Lifecycle, which is fully automated.

DevOps engineers must automate all the above processes. It should be so seamless that when developers push their code to a central repository, it is fetched, run through all the above steps, and sent to production systems. “From code to a product,” as I say.

What Is Continuous Integration?

Developers push their code to a central repository several times a day. Every time the code changes, it should be pulled, built, and tested, and the team should be notified of the result. Because the code changes regularly, we must repeat these steps over and over. That’s why it’s called continuous integration. We have a separate chapter where this is discussed in detail. For now, you can understand from the diagram that steps 1 to 4 are CI.
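
The repeat-on-every-change idea can be shown with a toy CI server. This is a sketch under assumptions, not how any particular CI tool works: the `CIServer` class, the commit dictionaries, and the rule that a commit fails when its message contains "broken" are all made up for illustration.

```python
def run_tests(commit):
    # Hypothetical test rule: a commit fails if its message contains "broken".
    return "broken" not in commit["message"]


class CIServer:
    """Toy CI server that processes each pushed commit exactly once."""

    def __init__(self):
        self.seen = 0            # number of commits already processed
        self.notifications = []  # messages a real server would email or post to chat

    def poll(self, repository):
        """Process every commit pushed since the previous poll."""
        new_commits = repository[self.seen:]
        for commit in new_commits:
            status = "PASSED" if run_tests(commit) else "FAILED"
            self.notifications.append(f"{commit['id']} by {commit['author']}: {status}")
        self.seen = len(repository)
        return len(new_commits)


repository = [
    {"id": "c1", "author": "dev-a", "message": "add login page"},
    {"id": "c2", "author": "dev-b", "message": "broken checkout refactor"},
]
ci = CIServer()
ci.poll(repository)
repository.append({"id": "c3", "author": "dev-b", "message": "fix checkout"})
ci.poll(repository)
print("\n".join(ci.notifications))
```

Because every commit gets its own result, a failure points at one small change and one author instead of a whole release.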

What Is Continuous Delivery?

After CI, we should also be able to deliver our code changes to all the servers in different environments like Dev, QA, and Staging. The code should be automatically delivered to QA servers, where testers run functional tests, load tests, and so on. After it passes the QA tests, the code should automatically be promoted to a staging area, where a customer or some set of users can check the changes and approve deploying them to production.

As Per Wikipedia

Continuous delivery and DevOps are often confused because their meanings are similar, but they represent two different concepts. DevOps has a broader scope and centers around cultural change, specifically the collaboration of the various teams involved in software delivery (developers, operations, quality assurance, management, etc.), as well as automating the processes in software delivery. Continuous delivery, on the other hand, is an approach to automate the delivery aspect and focuses on bringing together different processes and executing them more quickly and frequently. So, DevOps can be a product of continuous delivery, and CD flows directly into DevOps.

What Is Continuous Deployment?

If the approval is a manual process, then code delivery is continuous delivery. But if the approval process is automated, then after staging, the code changes are deployed directly to production systems. This is called “continuous deployment.”
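
The difference between the two comes down to a single gate, which a short sketch can make concrete. The function `promote`, its parameters, and the environment names in `ENVIRONMENTS` are assumptions chosen for this illustration.

```python
ENVIRONMENTS = ["dev", "qa", "staging"]


def promote(build, tests_pass, auto_approve, manual_approval=False):
    """Return the environments a build passed, plus production if it is approved.

    auto_approve=False models continuous delivery (a person approves the release).
    auto_approve=True models continuous deployment (no manual gate).
    """
    passed = []
    for env in ENVIRONMENTS:
        if not tests_pass(build, env):
            return passed  # stop promoting at the first failing environment
        passed.append(env)
    if auto_approve or manual_approval:
        passed.append("production")
    return passed


def always_green(build, env):
    # Hypothetical test hook that passes in every environment.
    return True


print(promote("build-42", always_green, auto_approve=False))
print(promote("build-42", always_green, auto_approve=False, manual_approval=True))
print(promote("build-42", always_green, auto_approve=True))
```

In the first call the build waits in staging for a person to approve it. In the last call nothing stands between staging and production.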

The steps of the DevOps and software development lifecycle are listed below:

● Check in code.

● Pull code changes for the build.

● Run tests (continuous integration server to generate builds & arrange releases): Test individual models, run integration tests, and run user acceptance tests.

● Store artifacts and build a repository (repository for storing artifacts, results & releases).

● Deploy and release (release automation product to deploy apps).

● Configure the environment.

● Update databases.

● Update apps.

● Push to users, who receive tested app updates frequently and without interruption.

● Application & Network Performance Monitoring (preventive safeguard).

● Rinse and repeat.

The above process is also called a Code Delivery Pipeline.

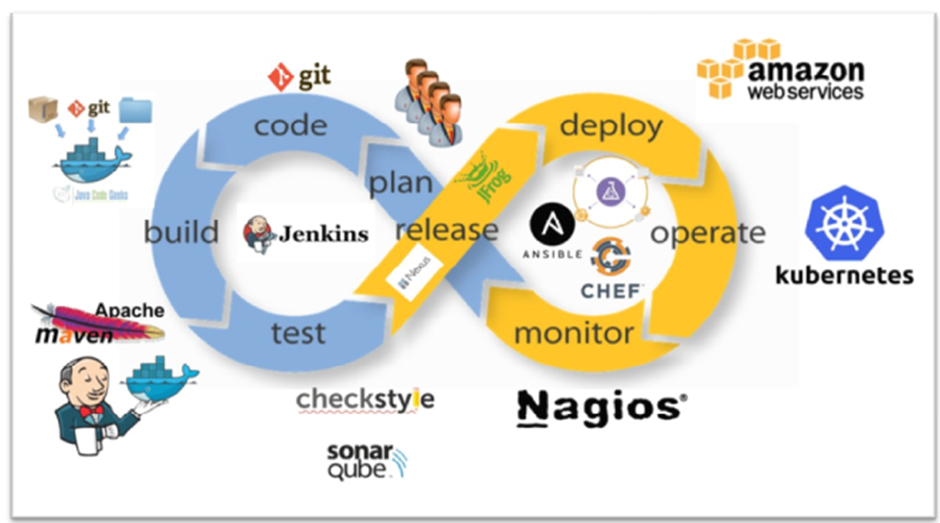

We have discussed earlier that everything starts with communication and collaboration between Dev and Ops. Once we understand the culture and process of the project/product, we can begin working with everyone in designing the code delivery pipeline. We must decide what automation tools to use to create an entire pipeline. We need to decide where our infrastructure will be hosted. On the cloud, virtual machines, or physical machines? In today’s world, we have a lot of automation tools, but first, we need to understand their categories: what tools are used for what purpose? If we don’t understand that, then we won’t be able to decide where to use them in our code delivery pipeline.

Version Control Systems:

A version control system is used to store the source code. It is a central place that keeps all the code and tracks its versions.

For Example:

· Git

· SVN

· Mercurial

· TFS

Build Tools:

The build process is where we take the raw source code, test it and build it into the software. This process is automated by build tools.

For Example:

· Maven

· ANT

· MSBuild

· Gradle

· NANT

Continuous Integration Tools:

● Jenkins

● Circle CI

● Hudson

● Bamboo

● TeamCity

Configuration Management Tools:

Also known as automation tools, these can be used to automate system-related tasks like software installation, service setup, and file push/pull. They are also used to automate cloud and virtual infrastructure.

For Example:

· Ansible

· Chef

· Puppet

· Saltstack

Cloud Computing:

Well, this is not a tool but a service that users access through the internet. It provides us with computing resources to create virtual servers, virtual storage, networks, and so on. A few providers in the market offer public cloud computing services.

For Example:

· AWS

· Azure

· Google Cloud

· Rackspace

· DigitalOcean

Monitoring Tools:

Monitoring tools are used to monitor the health of our infrastructure and applications. They send us notifications and reports through email or other means.

For Example:

· Nagios

· Sensu

· Icinga

· Zenoss

· Monit
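
At its core, the kind of check a monitoring tool performs is a comparison of readings against limits. The sketch below is a simplified illustration and does not use the API of any of the tools listed. The function `check_health`, the metric names, and the threshold values are all hypothetical.

```python
def check_health(metrics, thresholds):
    """Return alert messages for metrics that are missing or above their threshold."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: no data received")
        elif value > limit:
            alerts.append(f"{name}: {value} is above the limit of {limit}")
    return alerts


# Hypothetical readings and limits, in percent.
thresholds = {"cpu_percent": 90, "disk_percent": 80, "memory_percent": 85}
metrics = {"cpu_percent": 42, "disk_percent": 93}

for alert in check_health(metrics, thresholds):
    print("ALERT:", alert)  # a real tool would send an email or chat message here
```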

Containers & Microservices:

Well, to be very frank, this cannot be described to you right now. It’s described in detail in a separate chapter. We need a lot of infrastructure and development knowledge to understand this category of tools.

For Example:

· Docker

· RKT

· Kubernetes

DevOps Lifecycle with DevOps Tools

Summary:

· DevOps cannot be defined in one sentence. DevOps is both the culture and the implementation of automation tools. The definition depends on which area you are looking at.

· The majority of software development now happens with the Agile model, which churns out code changes incrementally and frequently.

· Operations does not gel well with the Agile team, as the two differ in their principles.

· The Agile team wants quick change; Ops wants to keep the system stable by not making frequent changes.

· DevOps helps create communication, collaboration, and integration between Dev and Ops at the culture, practices, and tools levels.

· DevOps engineers must understand the DevOps lifecycle and implement the right automation tool at the right place.

Learning automation at every level of the lifecycle is essential if you want to make a career in the DevOps domain. You need to understand infrastructure, development, and automation.